If you’ve spent any time exploring artificial intelligence tools lately, you’ve probably noticed something. The landscape isn’t just growing—it’s exploding. Every month brings another headline about a new language model that’s faster, smarter, or better at some specific task.

One day you’re reading about Claude’s thoughtful reasoning. The next, you’re hearing GPT-5 can write code in seconds. Then someone tells you Gemini just crushed a benchmark in multilingual tasks.

It’s exciting, sure. But it’s also overwhelming. Which model should you use? Do you need separate subscriptions for each one? What if the perfect answer to your question lives in a tool you’re not even using today?

That’s where T3 Chat steps in to save the day.

If you’ve ever wished you could sample the best of what multiple AI models have to offer without the hassle of juggling tabs, accounts, or billing cycles, you’re in the right place. T3 Chat makes multi-model exploration not just possible—but genuinely practical.

The Multi-Model Reality We’re Living In

Let’s be honest. No single AI model does everything brilliantly. Claude might excel at nuanced dialogue and careful reasoning. GPT models bring speed and versatility across general tasks. Gemini shines in multilingual contexts and complex data synthesis. DeepSeek delivers impressive performance at a fraction of the cost. Each has strengths. Each has trade-offs.

Research on model ensembles supports what many users have suspected: combining outputs from multiple models can improve results on some tasks. That’s not just theory. It’s practice.

Developers and businesses are already using multi-LLM strategies to boost accuracy, creativity, and reliability. But for the average user, accessing those models separately is a logistical nightmare. You’d need multiple accounts, multiple payment methods, and multiple browser tabs just to compare a simple prompt across platforms.

T3 Chat cuts through that friction. Instead of forcing you to become a subscription manager, it gives you a single interface to experiment with different AI personalities and capabilities. You ask once. You get multiple perspectives. You choose what works best.

What Makes T3 Chat Different

At its core, T3 Chat is designed around flexibility and simplicity. You’re not locked into one model. You’re not stuck in a walled garden. You’re free to explore, compare, and switch as your needs evolve.

Here’s what that looks like in practice.

One Platform, Multiple Models

T3 Chat brings together some of the most capable language models available today. You can switch between Claude, GPT models, Gemini, and others without leaving your workspace. No need to remember which site hosts which AI. No need to re-enter your question three times. Just pick your model, ask your question, and compare results if you want to.

Transparent Pricing That Makes Sense

One of the biggest headaches with multi-model usage is cost. Some platforms charge per seat. Others bundle features you don’t need. T3 Chat keeps pricing straightforward. You pay for what you use, and you can see exactly where your tokens are going. That makes budgeting simple, whether you’re a solo user or a small team.

Built for Real Work, Not Just Demos

T3 Chat isn’t just a novelty playground. It’s built for people who need reliable AI assistance day after day. That means fast responses, stable performance, and the ability to handle everything from brainstorming sessions to complex technical queries.

You can use it for research, content creation, code review, language translation, or just exploring new ideas. The interface adapts to what you’re doing, not the other way around.

No AI Vendor Lock-In

When you build workflows around a single AI provider, you’re betting your productivity on their roadmap, their uptime, and their pricing decisions. T3 Chat reduces that risk. If one model isn’t cutting it for a particular task, you can pivot instantly. That kind of agility matters more than ever in a space where model capabilities are evolving weekly.

When Multi-Model Access Actually Helps

You might be wondering: do I really need more than one AI? For many tasks, the answer is no. A single capable model will handle most everyday questions just fine. But there are moments when having options changes everything.

Imagine you’re drafting a technical explanation and you want to make sure it’s clear, accurate, and engaging. You could ask Claude for a thoughtful breakdown, GPT for a punchy summary, and Gemini for a version optimised for non-native English speakers. Then you pick the best elements from each.

Or maybe you’re stuck on a tricky coding problem. One model suggests an elegant solution but misses an edge case. Another flags the edge case but overcomplicates the code. A third offers a middle path you hadn’t considered. Suddenly you’re not just getting an answer—you’re getting perspective.

These aren’t hypothetical scenarios. They’re happening right now. Teams are experimenting with multi-LLM workflows to improve decision-making, reduce errors, and unlock creative breakthroughs that wouldn’t emerge from a single source.

Practical Use Cases for T3 Chat

Here are some ways people are already using T3 Chat to make multi-model access work for them.

Research and Learning

If you’re digging into a new topic, getting multiple AI perspectives helps you triangulate the truth. One model might give you a high-level overview. Another dives into the technical details. A third connects the dots to adjacent fields you hadn’t thought about. T3 Chat makes that exploration seamless.

How to use multi‑LLM research effectively with T3 Chat

- Start broad, then go deep. Ask one model for a plain‑English overview, then send the same prompt to a second model for domain‑specific terminology and references.

- Prompt a third model to challenge assumptions or list counterarguments. This mirrors critical synthesis workflows discussed in guides like the analyst‑focused approach to literature reviews in the PRISMA methodology, adapted for AI-assisted work.

- Cross‑verify facts. Request citations or “show your working” steps. Some models can outline their reasoning or provide links to primary sources. When they do, click through to confirm accuracy and look for converging evidence from reputable outlets.

- Use contrast prompts. Ask models to explain the same concept to a designer, a developer, and a C‑suite stakeholder. The differences reveal gaps, jargon traps, and the most transferable framing for your audience.

- Build a living brief. Save the strongest explanations, definitions, and examples, then ask another model to reconcile conflicts and produce a short, source‑aware summary. This creates a defensible knowledge base you can refresh as the field evolves.
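The workflow above can be sketched in a few lines of Python. This is only an illustration of the pattern, not T3 Chat's API: the three model functions are hypothetical stubs that stand in for real model calls, and `fan_out` and `build_brief` are names invented for this example.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# A "model" here is any function that takes a prompt and returns text.
ModelFn = Callable[[str], str]


# Hypothetical stubs: replace these with real model calls in practice.
def overview_model(prompt: str) -> str:
    return f"Plain-English overview of: {prompt}"


def specialist_model(prompt: str) -> str:
    return f"Technical detail and terminology for: {prompt}"


def critic_model(prompt: str) -> str:
    return f"Counterarguments and open questions about: {prompt}"


MODELS: Dict[str, ModelFn] = {
    "overview": overview_model,
    "specialist": specialist_model,
    "critic": critic_model,
}


def fan_out(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send one prompt to every model concurrently and collect answers by name."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        return {name: future.result() for name, future in futures.items()}


def build_brief(answers: Dict[str, str]) -> str:
    """Join the labelled answers into one text block for a reconciliation prompt."""
    return "\n".join(f"[{name}] {text}" for name, text in answers.items())


if __name__ == "__main__":
    answers = fan_out("retrieval-augmented generation", MODELS)
    print(build_brief(answers))
```

Keeping each answer labelled by its source makes the final reconciliation step easier, because the model (or person) writing the summary can see which claims came from where.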

Content Creation

Writing is iterative.

Sometimes you need a punchy hook that lands in seven words; other times you need a layered explainer that earns trust with nuance. By testing the same brief across multiple models in T3 Chat, you can audition different voices, compare structures, and spot the version that actually resonates with your audience.

For practical patterns, explore this concise guide to multi‑model prompting and contrast it with audience‑first principles from Content Design London to keep outputs readable and purposeful.

Cross‑model prompting also reduces risk. No single model nails every brief or domain, so comparing outputs helps you triangulate clarity, accuracy, and style in one pass: a snappy headline from one, tighter structure from another, deeper context from a third.

Recent evaluations of ensemble prompting show measurable gains in factuality and coherence, while newsroom policies such as the BBC’s guidance on using generative AI in journalism outline how to verify claims and sources.

When the outputs converge, you’ve found an angle worth shipping; when they diverge, you’ve learned exactly what to refine next. No more guessing which AI will “get it” on the first try.

Code Assistance

Different models have different coding strengths. One might generate clean Python faster, another might be superb at untangling asynchronous JavaScript, and a third might shine at explaining trade‑offs in plain English.

With T3 Chat, you can run the same prompt across models and compare outputs side by side, pulling the best ideas into a single solution.

When you’re shaping prompts, lean on proven tactics from a practical playbook on writing effective prompts for developers and cross‑check fixes with language‑specific guidance such as the official Python debugging tutorial and Mozilla’s JavaScript debugging guide to keep your workflow reliable.

When you hit a wall, treat each model like a specialist on call.

Ask one model for a minimal reproducible example, another for a step‑through explanation, and a third for tests that catch edge cases—then merge the strongest pieces.
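One way to merge the strongest pieces is to let the edge-case tests from one model judge the code suggested by the others. The sketch below assumes two hypothetical suggestions for the same small task (splitting a list into chunks); `chunk_a`, `chunk_b`, and the test helpers are invented for illustration and do not come from any particular model.

```python
from typing import Callable, List

ChunkFn = Callable[[List[int], int], List[List[int]]]


def chunk_a(items: List[int], size: int) -> List[List[int]]:
    """Hypothetical suggestion A: concise, but a negative size silently returns []."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def chunk_b(items: List[int], size: int) -> List[List[int]]:
    """Hypothetical suggestion B: same idea, with explicit input validation."""
    if size < 1:
        raise ValueError("size must be a positive integer")
    return [items[i:i + size] for i in range(0, len(items), size)]


def raises_value_error(fn: ChunkFn, items: List[int], size: int) -> bool:
    """Return True only if calling fn raises ValueError."""
    try:
        fn(items, size)
    except ValueError:
        return True
    return False


def passes_all(chunk: ChunkFn) -> bool:
    """Shared checks: the normal case, empty input, and an invalid size."""
    return (
        chunk([1, 2, 3, 4, 5], 2) == [[1, 2], [3, 4], [5]]
        and chunk([], 3) == []
        and raises_value_error(chunk, [1, 2], -1)
    )


results = {fn.__name__: passes_all(fn) for fn in (chunk_a, chunk_b)}

if __name__ == "__main__":
    for name, ok in results.items():
        print(f"{name}: {'pass' if ok else 'fail'}")
```

Running every candidate against the same checks turns "which answer looks better" into "which answer survives the edge cases", which is usually the more useful question before merging.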

For deeper reliability, explore techniques like test‑driven development with this concise primer on TDD in Python and use linters and formatters recommended in the Python code style guide and the ESLint documentation for JavaScript.

If you’re comparing algorithmic approaches, consult well‑maintained references like the Python time complexity notes and the V8 blog’s insights on performance to validate trade‑offs before shipping.

Language and Translation

If you work across languages, having access to models trained on diverse datasets matters. T3 Chat lets you route the same prompt to different engines so you can compare clarity, fluency, and cultural fit side by side.

For foundational guidance on building multilingual content that actually works, see Google’s international SEO best practices and the W3C’s internationalisation overview for language tagging, locale, and character handling.

If you’re evaluating translation quality, the industry‑standard MQM rubric and the COMET metric offer practical ways to assess accuracy, fluency, and adequacy—useful when deciding which model to trust for specific language pairs.

Some models excel at specialised tasks: Gemini is often highlighted for multilingual reasoning, while others may offer stronger terminology control or formality handling in specific locales.

For hands‑on techniques, review tips for prompt engineering in multilingual contexts and apply glossaries or style guides to keep terminology consistent across variants like en‑GB and en‑US.

When nuance really matters—legal, medical, or brand voice—combine machine output with human‑in‑the‑loop review and reference resources such as the EU’s IATE terminology database and Microsoft’s style guides to standardise tone and terms across markets.

T3 Chat gives you the flexibility to compare, calibrate, and select the best‑fit output without switching platforms.

The Bigger Picture: Why Model Diversity Matters

The AI landscape is moving fast. Just this month, we’ve seen new benchmarks that expose how different models handle reasoning, updates to ensemble techniques that improve multi-model collaboration, and new platforms designed specifically for multi-LLM workflows.

What does that tell us? It tells us that relying on a single AI isn’t just limiting—it’s risky. Models evolve. Companies pivot. Pricing changes. Features get deprecated. When you’re tied to one provider, you’re at their mercy.

T3 Chat flips that dynamic. You stay in control. You choose the tool for the job. You adapt as the space evolves.

| Feature | Single-Model Platform | T3 Chat Multi-Model Platform |
|---|---|---|
| Model Variety | One option | Claude, GPT, Gemini, and more |
| Switching Cost | High (new account, new workflow) | None (same interface) |
| Pricing Structure | Often per-seat or bundled | Pay-per-use, transparent |
| Vendor Lock-In | Yes | No |
| Comparison Capability | Manual copy-paste across tabs | Built-in side-by-side |

What’s On Offer: A Quick Tour

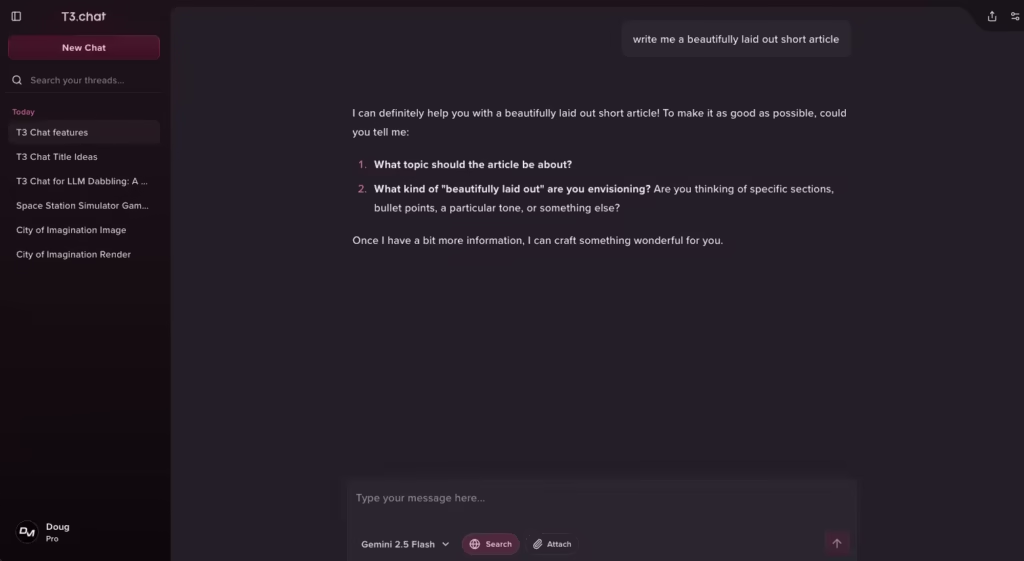

When you log into T3 Chat, you’re greeted with a clean, intuitive interface. No clutter. No upsells. Just a prompt box and a model selector. Here’s what you can do.

- Quick Prompts: Fire off a question and get a fast answer from your chosen model.

- Model Comparison: Run the same prompt through two or three different models and see how they stack up.

- Workspace Management: Organise your conversations by project, topic, or client. Everything stays where you left it.

- Token Tracking: See exactly how much compute you’re using. No surprises on your bill.

- Export Options: Save your favourite outputs, share them with collaborators, or drop them straight into your workflow.

It’s designed to get out of your way and let you focus on the work that matters.

Who T3 Chat Is For

T3 Chat isn’t just for developers or data scientists. It’s for anyone who wants reliable, flexible AI assistance without the friction, and it fits neatly into everyday workflows across roles.

For example, freelancers can draft proposals, repurpose copy, and polish client comms faster by comparing outputs and choosing the best tone for each deliverable; for practical guidance, see this overview of AI for freelancers and the Chartered Institute of Marketing’s perspective on responsible AI use in content to keep standards high.

Small teams can prototype ideas, document processes, and standardise messaging while keeping costs predictable—pair that with the UK’s National Cyber Security Centre advice on using generative AI safely to set sensible guardrails.

Researchers and students benefit from triangulating explanations across models while applying evidence‑first habits recommended in the PRISMA flow for literature reviews and university‑backed guidance on evaluating AI‑generated content.

Hobbyists and tinkerers can explore code, creative writing, or data tasks with low commitment, using hands-on prompting tips from a well-regarded prompt engineering guide to learn faster without vendor lock-in.

Who gets the most from T3 Chat in practice

- Freelancers and consultants who need to deliver high-quality work across domains—use multi-model comparison to match tone, structure, and depth to each brief, then validate facts with reputable sources like the BBC Academy’s editorial standards.

- Small teams looking for cost-effective AI tools that scale with their needs—combine model switching with lightweight process docs and adopt style consistency using resources such as the GOV.UK content style guide.

- Researchers and students exploring different models to understand their strengths—apply cross-model checking with evaluation frameworks like MQM for translation quality and keep a reading log with citations to primary sources.

- Hobbyists and tinkerers curious about what makes each AI tick—experiment with side-by-side prompts, then sanity-check results using language-specific docs like the Python tutorial or MDN Web Docs for JavaScript.

If you’ve ever thought, “I wish I could just try that other model without signing up for another service,” T3 Chat is your answer. Try a single prompt across multiple models, compare clarity and accuracy, and ship the version that works: no extra accounts, no tab-juggling, just focused output that fits your goals.

Frequently Asked Questions about T3 Chat

What models does T3 Chat support?

T3 Chat supports multiple leading LLM families, including:

- Claude: Strong for nuanced reasoning and safe, long-context writing. See Anthropic’s Claude overview for current capabilities and model variants.

- GPT models: Versatile for coding, content, and agents. Check OpenAI’s model index for up-to-date GPT options and context limits.

- Gemini: Noted for multimodal and multilingual tasks. Review Google’s Gemini models page for the latest tiers and features.

- Open‑source alternatives: Typically include high‑performing models like Llama‑family and Mistral‑family releases, which evolve quickly. See Meta’s Llama documentation and Mistral’s model catalogue for current checkpoints and licensing notes.

Do I need separate API keys for each model?

No separate keys are required.

T3 Chat manages provider integrations, authentication, and billing behind the scenes, so you just select a model and start prompting.

You also get centralised usage tracking in one place and can switch between Claude, GPT, Gemini, and open‑source models without reconfiguring credentials, reducing the need to store multiple third‑party keys across devices or teams.

If you prefer to bring your own key for a specific provider—for example, to align with enterprise billing or data residency policies—T3 Chat supports a BYOK setup that you can enable per workspace or per model while keeping everything else managed.

Can I compare responses from multiple models at once?

Yes—you can compare in real time.

T3 Chat lets you send a single prompt to multiple models concurrently, then view their outputs side by side in a unified pane so differences in clarity, depth, citations, and tone are immediately visible.

You can pin the strongest answer, highlight deltas, and request follow‑ups from any model without leaving the comparison view, which makes it easy to merge the best sections, ask one model to critique another’s answer, or generate a final synthesis that captures the strongest points from each.
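How T3 Chat computes its comparison view is not described here, but the idea of highlighting deltas between two answers can be sketched offline with Python's standard `difflib` module. The function names and the sample answers below are assumptions made for this example.

```python
import difflib


def highlight_deltas(
    answer_a: str,
    answer_b: str,
    label_a: str = "model_a",
    label_b: str = "model_b",
) -> str:
    """Return a unified diff of two answers, compared line by line."""
    diff = difflib.unified_diff(
        answer_a.splitlines(),
        answer_b.splitlines(),
        fromfile=label_a,
        tofile=label_b,
        lineterm="",
    )
    return "\n".join(diff)


def similarity(answer_a: str, answer_b: str) -> float:
    """Rough similarity score between 0 and 1; 1.0 means the texts are identical."""
    return difflib.SequenceMatcher(None, answer_a, answer_b).ratio()


if __name__ == "__main__":
    # Illustrative answers only; real model output would go here.
    first = "Use a cache.\nInvalidate on write."
    second = "Use a cache.\nInvalidate on a timer."
    print(highlight_deltas(first, second))
    print(f"similarity: {similarity(first, second):.2f}")
```

Lines prefixed with `-` appear only in the first answer and lines prefixed with `+` only in the second, so the points where two models disagree stand out without rereading both answers in full.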

Is there a free tier?

Yes—there’s a free option designed for light use, plus paid plans for heavier individual and team workloads.

T3 Chat’s tiers scale by usage limits and features, so you can start free to evaluate multi‑model comparisons and upgrade only if you need more capacity or collaboration tools.

For the latest allowances, inclusions, and regional pricing, check the pricing page directly, as plan names, credits, and limits are updated periodically to reflect new models and features.

What happens if a model I use gets deprecated?

If a model is deprecated, your chats and prompts remain intact and you can switch to an alternative in the same interface without losing context or history. For context on why staged deprecations and migration paths matter, see this overview of model lifecycle management in production AI platforms.

T3 Chat will flag the change, suggest closest equivalents by capability and cost, and let you re-run recent prompts with the replacement model so you can validate parity before continuing uninterrupted.

For practical guidance on validating parity—latency, accuracy, and cost—this checklist for evaluating model upgrades is a useful companion.

Is my data private on T3 Chat?

Your data is private by default.

T3 Chat applies industry‑standard controls—transport‑layer and storage encryption aligned with recommendations like the NCSC’s guidance on protecting data in the cloud, workspace‑level access segregation, and role‑based permissions—and your prompts and outputs are not used to train external models unless you explicitly opt in.

This mirrors provider commitments documented in policies such as OpenAI’s stance on data usage and privacy for API inputs and Google’s approach to Gemini API data handling.

Provider routing is scoped to the model you select, with the minimum necessary metadata shared for inference only. Enterprises can enable data residency options to keep data within chosen regions, set retention controls consistent with organisational policy, and turn on audit logging for oversight and compliance.

If you bring your own key for a provider, traffic for that model is isolated under your account, following “BYOK” practices similar to customer‑managed keys, and logs are limited to operational diagnostics rather than content payloads.

For a broader overview of safe usage patterns, see the UK NCSC’s practical advice on using generative AI and LLM assistants safely.

Can I export my conversation history on T3 Chat?

Yes—you can export your conversation history in common, portable formats suitable for archiving and sharing.

You’ll be able to choose specific chats or whole workspaces, include attachments where applicable, and preserve timestamps and model metadata so replays and audits remain accurate; exports can then be re-imported or shared with collaborators without breaking thread structure.

How often are new models added to T3 Chat?

T3 Chat adds new models on a rolling basis, prioritising stability, quality, and cost‑effectiveness for real‑world use, with integrations typically following major provider releases after internal evaluation and safety checks.

When providers ship significant updates—such as OpenAI’s model refreshes in the official model index and release notes or Google’s Gemini models page—T3 Chat assesses capabilities, context limits, and pricing before enabling them in production, aligning with deployment guidance like the UK NCSC’s advice on using generative AI securely.

Announcements appear in the in‑app changelog and notices so you can scan what’s new, including context windows, latency expectations, and usage considerations.

If you want to experiment sooner, you can opt into early access for experimental models in settings—an approach consistent with staged rollout best practices discussed by Stanford CRFM’s notes on evaluating foundation models and the OECD AI policy observatory’s guidance on trustworthy AI adoption.

The Bottom Line

The future of AI isn’t about finding the one perfect model. It’s about having the right tool for the right moment. T3 Chat understands that. Instead of forcing you into a single ecosystem, it opens the door to a universe of options. You get flexibility without complexity. You get power without vendor lock-in. You get to explore, experiment, and evolve as the technology does.

If you’re tired of juggling subscriptions, comparing screenshots, and wondering whether you’re using the best model for the job, T3 Chat is worth a look. It’s built for people who value choice, clarity, and control. And in a landscape that changes every week, that’s exactly what you need.